Can Demis Hassabis Save Google?

The DeepMind founder has a track record of insane AI breakthroughs. Can he do it within the Google mothership?

Demis Hassabis stares intently through the screen when I ask him whether he can save Google. It’s early evening in his native U.K. and the DeepMind founder is working overtime. His Google-owned AI research house now leads the company’s entire AI research effort, after ingesting Google Brain last summer, and the task ahead is immense.

Google’s core business is thriving, but that almost seems beside the point. Hassabis and I are speaking on Google Meet, in an interview arranged via Gmail, scheduled on Google Calendar, and researched via Google Search. Largely thanks to these core products, Google posted $307 billion in revenue last year, growing 13% in the fourth quarter, and is trading near its all-time high. But questions about its ability to win the AI race, or even competently run it, have clouded its recent success.

“I don't really see it like that,” Hassabis says, challenging the premise of my question. Artificial intelligence, he says, will “disrupt many, many things. And of course, you want to be on the cutting edge of that, influencing that disruption, rather than on the receiving end.”

Hassabis is the person who’s supposed to be keeping Google on that cutting edge. The award-winning researcher and neuroscientist, who was just knighted on Thursday, has led a dynamic AI team within Google responsible for numerous breakthroughs. Since its 2014 acquisition, DeepMind has cracked a seemingly impossible board game with AlphaGo, decoded protein structures with AlphaFold, and laid the groundwork for synthesizing thousands of new materials, all via revolutionary AI models.

But Hassabis and the combined Google DeepMind team must now translate those types of breakthroughs into tangible product improvements for a $1.8 trillion company seeking a way forward in an increasingly AI-driven world. And he must do it all without killing a search advertising business that serves up the lucrative blue links AI threatens.

Late on chatbots, rife with naming confusion, and with an embarrassing image generation fiasco just in the rearview mirror, the path forward won’t be simple. But Hassabis has a chance to fix it. To those who know him, have worked alongside him, and still do (all of whom I’ve spoken with for this story), Hassabis just might be the perfect person for the job. Perhaps even the future leader of the company.

“We're very good at inventing new breakthroughs,” Hassabis tells me. “I think we'll be the ones at the forefront of doing that again in the future.”

From Brains to Computers

Born in July 1976 to a Chinese-Singaporean mother and Greek Cypriot father, Hassabis began thinking about AI as a boy in North London. A young chess master with professional aspirations, Hassabis noticed at 11 years old that the electronic chess board he’d been training against had some form of intelligence inside, and grew interested in the tech. “I was fascinated by how this lump of plastic was programmed to be able to play chess,” he says. “I started reading some books about it and programming my own little AI games.”

After co-creating the hit game Theme Park at age 17, Hassabis went on to study computer science at Cambridge before returning to game development in his 20s. By then, rudimentary AI systems were growing ubiquitous in gaming and Hassabis decided he’d need to understand how the human brain works if he were to make a difference in the field. So he enrolled in a graduate neuroscience program at University College London and then did postdocs at MIT and Harvard.

“He was very smart, and in a different way than some of the other smart people I know,” says Tomaso Poggio, an MIT professor, computational neuroscience pioneer, and postdoc advisor to Hassabis. “It's not that he is technically a magician, in any one area — well, maybe chess — but he’s broadly smart about everything you can speak of. And it's very convincing, without any effort.”

One night, Poggio hosted Hassabis for dinner, and his student had an idea brewing for a new company that would employ lessons from neuroscience to advance the state of AI. Artificial brains could work similarly to human ones, he believed. And games could simulate real-world environments, an ideal training ground.

After the dinner, Poggio asked his wife if they should invest in Hassabis’s new company and, having just met him, she told him to get in. Poggio became one of DeepMind’s earliest investors, though he wishes he’d given more cash to Hassabis. “It was a good thing to do. Unfortunately, it was not enough money,” he says.

In DeepMind’s early days, Hassabis executed the vision by running AI agents through game simulations. In doing so, he helped advance reinforcement learning, a type of AI training where you run a bot with zero instruction, giving it countless opportunities to fail so eventually it learns what it needs to do to win.

“They had an agent playing all the Atari Games,” says Tejas Kulkarni, an AI researcher who worked at DeepMind and is now CEO of AI startup Common Sense Machines. “This was the first time that deep reinforcement learning proved itself. It was like, holy shit. This is the place to be. Everyone's flocked there, including me.”

If Atari was an appetizer, AlphaGo was the main course. Go is a board game with more playable combinations than atoms in the universe, an “Everest” of AI as Hassabis calls it. In March 2016, DeepMind’s AlphaGo — a program that combined reinforcement learning and deep learning (another AI method) — beat Go grandmaster Lee Sedol, four games to one, over seven days. It was a watershed moment for AI, showing that with enough computing power and the right algorithm, an AI could learn, get a feel for its environment, plan, reason, and even be creative. To those involved, the win made achieving artificial general intelligence — AI on par with human intelligence — feel tangible for the first time.

“That was pure magic,” says Kulkarni of the Go win. “That was the moment where people were like, okay, AGI is coming now.”

“We've always had this 20 year plan from the start of DeepMind,” says Hassabis, when asked about AGI. “I think we're on track, but I feel like that was a huge milestone that we knew what needed to be crossed.”

Enter OpenAI

As DeepMind rejoiced, a serious challenge brewed under its nose. Elon Musk and Sam Altman founded OpenAI in 2015, and despite plenty of internal drama, the organization began working on text generation.

Ironically, a breakthrough within Google, called the transformer model, led to the real leap. OpenAI used transformers to build its GPT models, which eventually powered ChatGPT. Its generative “large language” models employed a form of training called “self-supervised learning,” focused on predicting patterns rather than understanding their environments, as AlphaGo did. OpenAI’s generative models were clueless about the physical world they inhabited, making them a dubious path toward human-level intelligence, but they would still become extremely powerful.

Within DeepMind, generative models weren’t taken seriously enough, according to those inside, perhaps because they didn’t align with Hassabis’s AGI priority, and weren’t close to reinforcement learning. Whatever the rationale, DeepMind fell behind in a key area.

“We’ve always had amazing frontier work on self-supervised and deep learning,” Hassabis tells me. “But maybe the engineering and scaling component — that we could’ve done harder and earlier. And obviously we’re doing that completely now.”

Kulkarni, the ex-DeepMind engineer, believes generative models were not respected at the time across the AI field, and simply hadn’t shown enough promise to merit investment. “Someone taking the counter-bet had to pursue that path,” he says. “That’s what OpenAI did.”

As OpenAI worked on the counter-bet, DeepMind and its AI research counterpart within Google, Google Brain, struggled to communicate. Multiple ex-DeepMind employees tell me their division had a sense of superiority. And it also worked to wall itself off from the Google mothership, perhaps because Google’s product focus could distract from the broader AGI aims. Or perhaps because of simple tribalism. Either way, after inventing the transformer model, Google’s two AI teams didn’t immediately capitalize on it.

“I got in trouble for collaborating on a paper with a Brain because the thought was like, well, why would you collaborate with Brain?” says one ex-DeepMind engineer. “Why wouldn't you just work within DeepMind itself?”

DeepMind kept pushing its core research forward though. And in July 2022, its AlphaFold model predicted the 3D structures for nearly all proteins known to science. It was yet another major advance, and will likely fuel decades of drug discovery. Hassabis tells me it’s his signature project.

“We've had hundreds of thousands of biologists and scientists from around the world that are now tapping that database,” DeepMind chief business officer Colin Murdoch says in a Big Technology Podcast interview. The scientists are working on everything from antibiotic resistance to malaria vaccine development. It’s a massive breakthrough.

Then, a few months later, OpenAI released ChatGPT.

AI War & The Future Of Google

At first, ChatGPT was a curiosity. The OpenAI chatbot showed up on the scene in late 2022 and publications tried to wrap their heads around its significance. “ChatGPT is OpenAI's latest fix for GPT-3,” read an MIT Technology Review headline digesting its debut. “It's slick but still spews nonsense.”

Within Google, the product felt similar to LaMDA, a generative AI chatbot the company had run internally (it even convinced one employee it was sentient) but never released. When ChatGPT became the fastest growing consumer product in history, and seemed like it could be useful for search queries, Google realized it had a problem on its hands. Almost immediately, people began connecting it with the innovator’s dilemma. In spirit if not in name, a “code red” took hold within the company.

Peacetime was over at Google. And in the new AI war, its first major move was to combine the rival Google Brain and DeepMind teams under the Google DeepMind banner with Hassabis at the helm. Large language models take a tremendous amount of computing to run and train, and dividing the compute between two AI research divisions would hamper their progress. So from that standpoint, a merger made practical sense.

In Hassabis’s telling, AI research and products also began to collide to a point where it was logical to combine them. Whether solving protein folding leads to better search is still a bit uncertain, but Hassabis offers a worthy argument. Building a reliable science assistant, he says, would require solving AI’s hallucination problem. “If we solve that in that domain,” he says, “we could bring that into the core Gemini, and then solve it for chatbots and assistants too.”

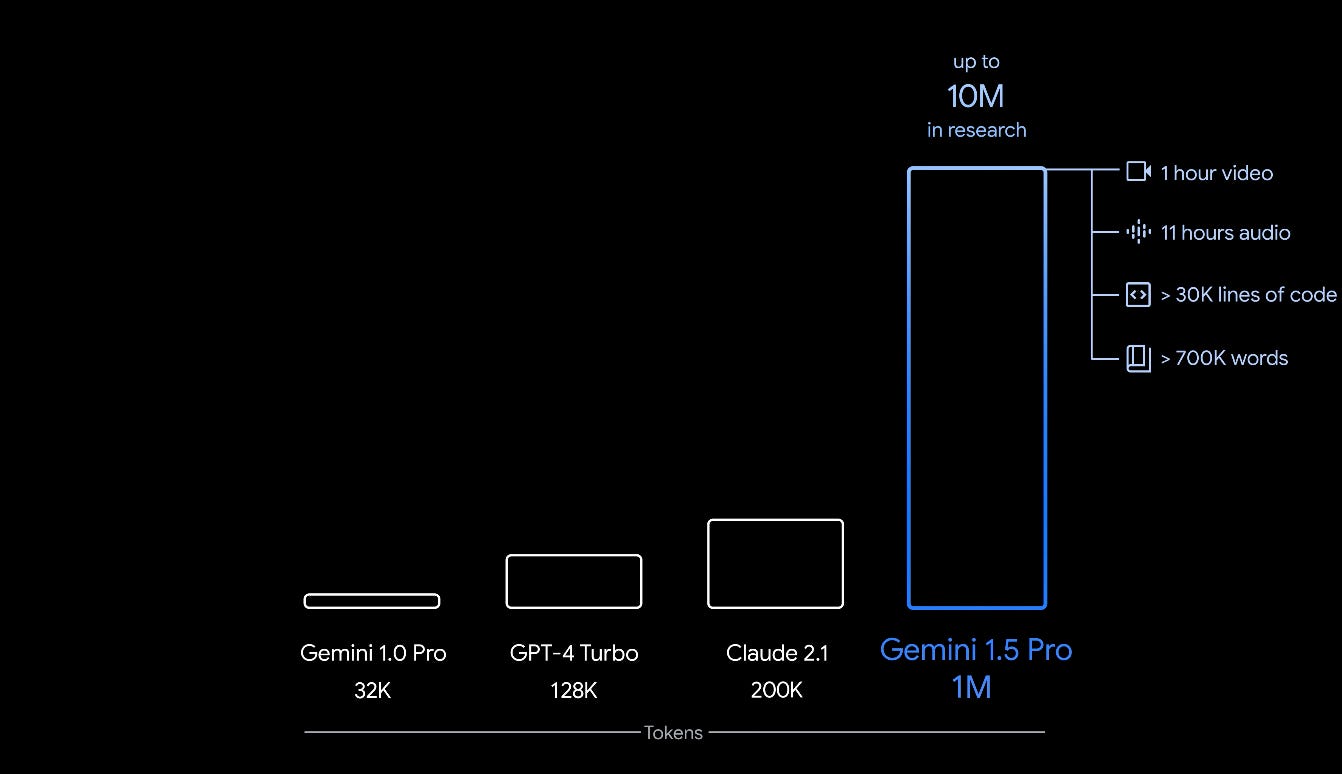

Gemini, the product Hassabis mentions, is Google’s answer to OpenAI’s GPT models. By most expert accounts, it’s on par with OpenAI’s technology. And in February, Hassabis and Google CEO Sundar Pichai announced Gemini 1.5, a large language model with a context window of up to 1 million tokens. That’s enough to handle 1 hour of video, 11 hours of audio, or ten books’ worth of information. An effective counterpunch.

Few question Google DeepMind’s ability to produce great AI models, but those close to the company wonder whether it can navigate Google’s bureaucracy and translate that research into great products. To succeed, Hassabis will have to convince a conservative Google product organization to push his advances into production. And for a company that’s extremely hesitant to ship changes that might upset the equilibrium that’s made it successful, it’ll be no simple matter.

“They're like this large semi truck that’s trying to move at the pace of a Ferrari,” says Gaurav Nemade, an ex-Google product manager who worked on LaMDA.

Google felt the pain acutely earlier this month as its Gemini image generator went off the rails, creating historically inaccurate images, including some portraying Nazis as people of color. It was an embarrassing episode and largely a product of organizational dysfunction.

When I asked my sources what Hassabis had to do to be successful, nearly all wanted to know whether Google would give him the remit to push dramatic, even painful, changes within the company’s products to move AI forward. Hassabis tells me he’s still on the research side and not sitting in product meetings, but that his work is now deeply entwined with Google’s product organization. “We’re increasingly connected with the product units,” he says. “There's been a huge demand in the last couple of years for brainstorming ways those technologies can help with the product features.”

AI’s Next Step

As chatbots expand beyond conversational partners — becoming agents that take action on our behalf — Hassabis’s fundamental research is poised to play a leading role. OpenAI is already developing agent software that autonomously takes action, and Hassabis says DeepMind is working heavily on this too.

“We were steeped in agents from the beginning, right? That’s what all of our games work is,” he says. “We believe that agent systems are actually what you need for intelligence.”

Just like AlphaGo mapped out its environment using Hassabis’s beloved reinforcement learning, AI agents could use similar technology to map out our world and take action on their own. It’s a big step beyond today’s conversational models, which require users to initiate the interaction and only then deliver information. When Hassabis talks about the possibilities of this full circle moment, he lights up.

“The next step is for these systems to do things for you, solve things for you, book holidays, restaurants, whatever. You can give them goals, and so on,” he says. “We're experts in doing that.”

If Hassabis nails the assignment, he may face new questions, including whether he should run Google itself, not just its AI research. Success here would mean returning Google to AI leadership, a feat given where it stands today. Many of those who know Hassabis pine for him to become the next CEO, saying so in their conversations with me. But they may be waiting a while.

“I haven't heard that myself,” Hassabis says after I bring up the CEO talk. He instantly points to how busy he is with research, how much invention is just ahead, and how much he wants to be part of it. Perhaps, given the stakes, that’s right where Google needs him. “I can do management,” he says, “but it's not my passion. Put it that way. I always try to optimize for the research and the science.”

Douglas Gorman contributed reporting

What Else I’m Reading, Etc.

The Atlantic has 1 million subscribers and is profitable [WSJ]

Top podcasters are gaming the charts by trading mobile game points for follows [Bloomberg]

Podcaster Andrew Huberman had multiple relationships in parallel [New York]

The U.K. marks its wealth of unicorns with an ever-changing statue [VentureBeat]

New York prisons will lock down during the solar eclipse [Hell Gate]

Quote Of The Week

He will never own a home or have a retirement account, and in some states, he’ll never vote. He will forever be scrutinized by the federal government. SBF will never work in his industry again. It’s unlikely he’ll ever hold an officer position unless it’s within his own company, which, if he manages to build, would have a lien placed against it by the government, particularly if it has tangible assets.

A white-collar criminal’s note about what Sam Bankman-Fried faces from here

Number of The Week

25

Years in prison Sam Bankman-Fried was sentenced to this week after scamming FTX users. He was also ordered to forfeit $11 billion.

The Yahoo Episode — With Jim Lanzone

Jim Lanzone is the CEO of Yahoo. He joins Big Technology Podcast for the long-awaited Yahoo Episode, a deep dive into a company that remains one of the most visited and influential properties on the web. Tune in as Lanzone describes how Yahoo's verticals operate, how the company thinks about generative AI for its search bar, and whether it's still possible to build a solid business on the web. Ranjan Roy joins us as well for a rare Wednesday appearance on the podcast. Tune in for a deep, engaging conversation with a CEO at the helm of a crucial internet cornerstone.

You can listen on Apple, Spotify, or wherever you get your podcasts.

Send me news, gossip, and scoops?

I’m always looking for new stories to write about, big or small, from within the tech giants and the broader tech industry. You can share your tips here. I will never publish identifying details without permission.

My book Always Day One digs into the tech giants’ inner workings, focusing on automation and culture. I’d be thrilled if you’d give it a read. You can find it here.

Questions? Email me by responding to this email, or by writing alex.kantrowitz@gmail.com

News tips? Find me on Signal at 516-695-8680