
Big Technology subscribers enable us to publish stories like today’s breakdown of OpenAI’s new reasoning models. Subscribe today to view all Big Technology reporting, avoid the paywall, and support independent tech journalism:

Is OpenAI’s New “o1” Model The Big Step Forward We’ve Been Waiting For?

The new model can reason through really hard problems. Some will see its promise before others.

On Thursday, OpenAI released “o1,” a new AI model that can reason through hard problems by breaking them down to their component parts and handling them step by step. Released in two iterations, o1-preview and o1-mini, the model is available to all ChatGPT Plus users, with a broader release to follow.

The o1 release is the first of OpenAI’s “Strawberry” AI reasoning project (originally called Q*), which the company believes is a major step forward for the field. “We think this is actually the critical breakthrough,” OpenAI research director Bob McGrew told The Verge this week. “Fundamentally, this is a new modality for models in order to be able to solve the really hard problems that it takes in order to progress towards human-like levels of intelligence.”

After trying the new o1 models myself and analyzing the documentation, I’m already impressed, but I still have some pretty big questions. Here are my critical takeaways from the release, including whether it is indeed OpenAI’s long-anticipated big step forward:

A step change in AI?

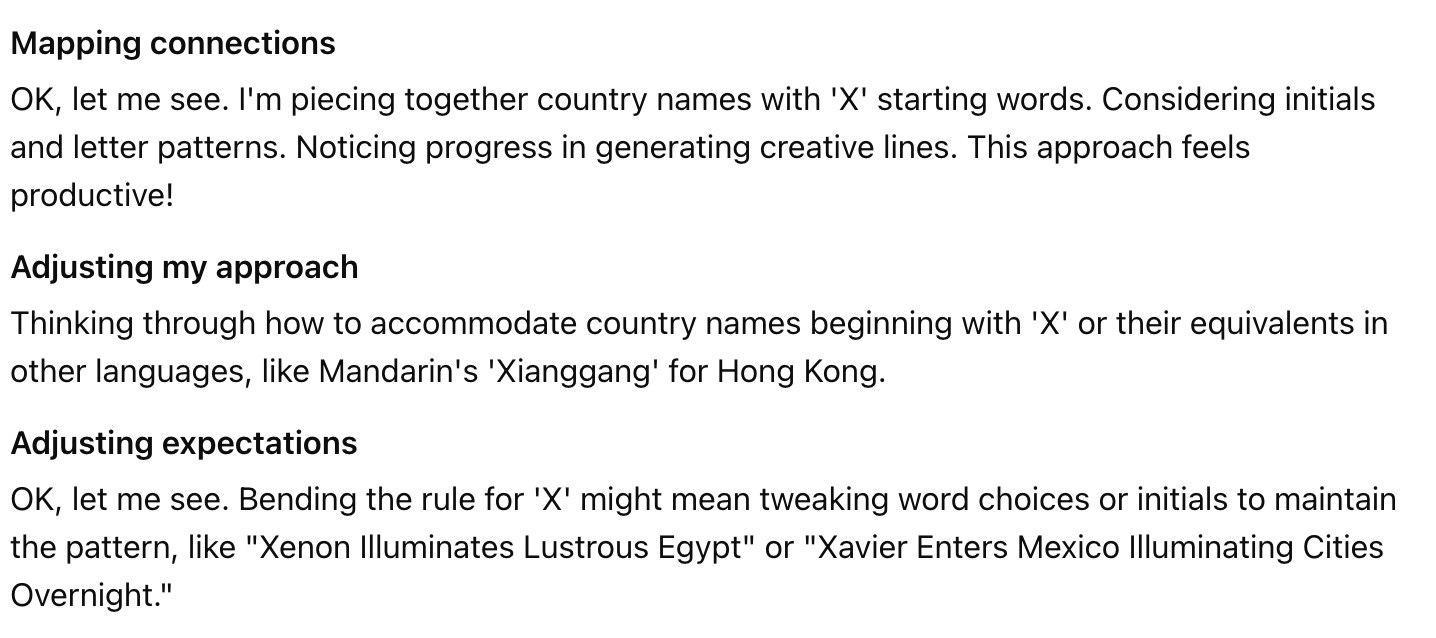

OpenAI’s o1 models write out their chain of thought as they work through your queries, showing how they ‘think’ through the problem before delivering an answer. I asked o1-preview, the most powerful model available, to write a poem with 14 lines, spelling my name out with the first letter of each line, and spelling a country name with the first letter of the words in each sentence. The model ‘thought’ for 59 seconds, handling some rows easily but trying hard to find a country that started with the “X” in my first name. Eventually, it realized it couldn’t answer that part satisfactorily, but it nailed the rest of the poem. “A unique star travels radiantly in autumn,” it began, using the “A” in Alex to start the poem and spelling out Austria across the line. I gave Anthropic’s Claude the same prompt and it failed.

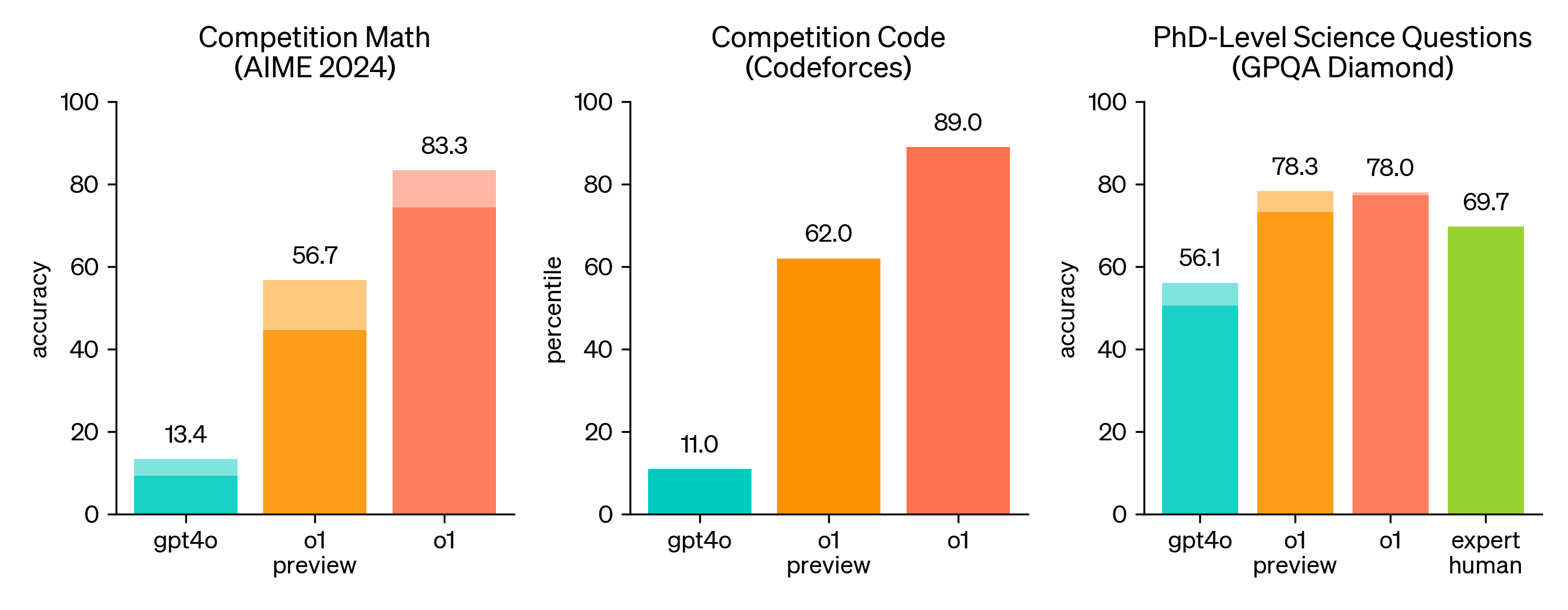

The o1 model’s ability to handle these multi-step, complex tasks suggests OpenAI has once again advanced AI’s state of the art, but the magnitude of the advance will still take some time to determine. Though o1 exceeds existing benchmarks in coding, math, and science, its ‘chain of thought’ can feel like a party trick in other cases. In a best-case scenario, o1 is a step on a path to something potentially bigger.

More of a math and science thing

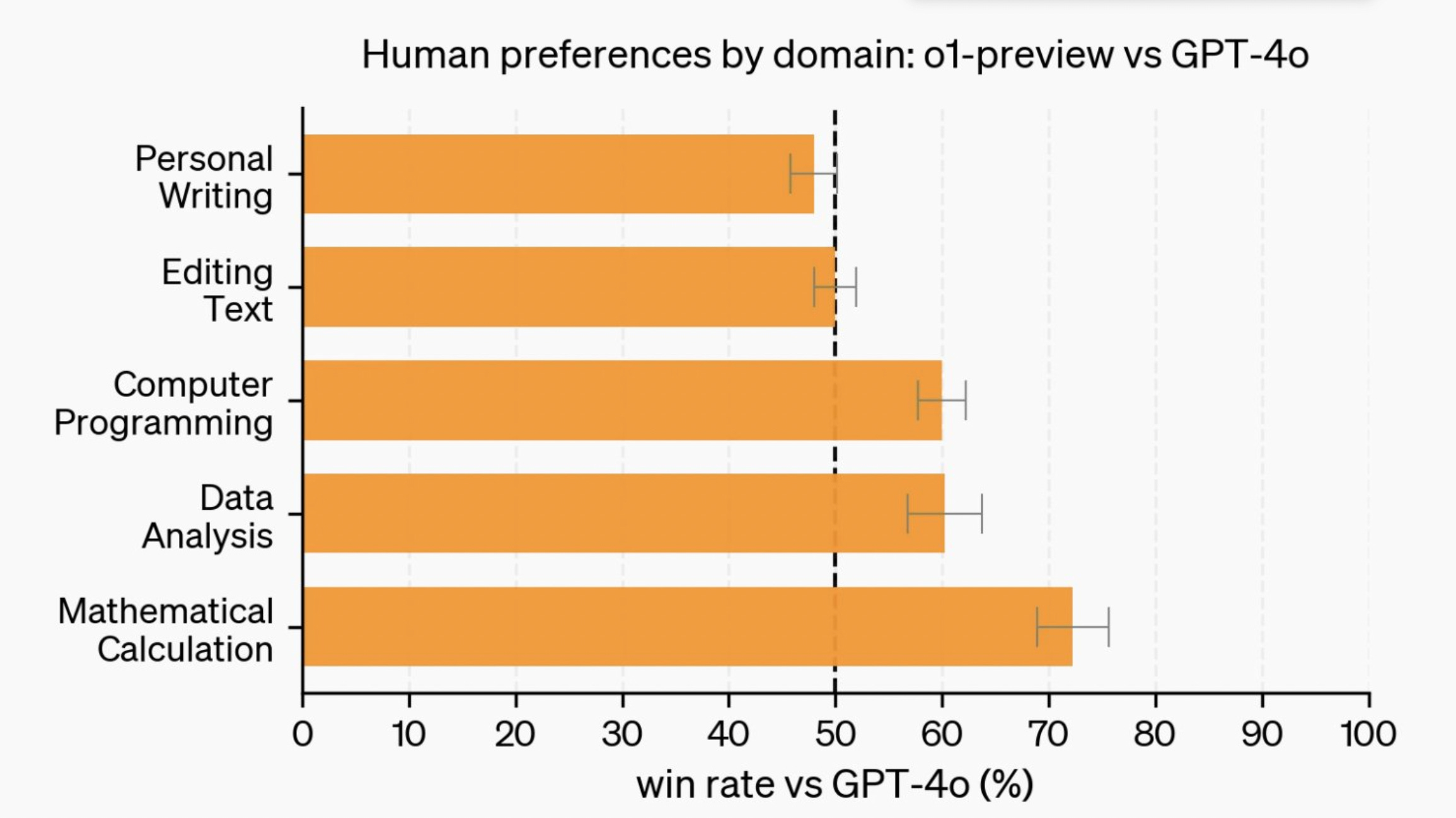

These new models will likely create a divergence of public opinion on AI. People who use AI for writing, editing, and marketing tasks will likely be disappointed. But people who use it for coding, math, and science research will be thrilled. In OpenAI’s testing, people who used o1 for writing actually preferred it less than GPT-4o. But those who used it for mathematical calculation, data analysis, and computer programming preferred it by a wide margin.

‘Words people’ who write about this technology might therefore be more negative about it, given their subjective experience, while ‘math people’ using it in its best use case see its full capabilities. That could create more negative perceptions of the technology than merited, something that bears watching as OpenAI pushes ahead toward a $150 billion valuation.

Chat vs. Work

To get the most out of reasoning models, you may have to assign them work as opposed to chatting with them. Scott Stevenson, CEO of Spellbook, an AI legal assistant, said the bot is good at taking long sets of instructions and using them to modify legal documents. “When people are underwhelmed by o1, I think it’s because they’re thinking of it as chat still,” Stevenson said. “Its ability to *do work* is going to be really good.”

If this sounds like a step toward AI agents to you, it does to me as well. As OpenAI licenses this technology, it’s inevitable that companies will attempt to build AI agents with it. Still, despite the buzz, so-called ‘agentic AI’ appears far off.

OpenAI’s Competency and Focus

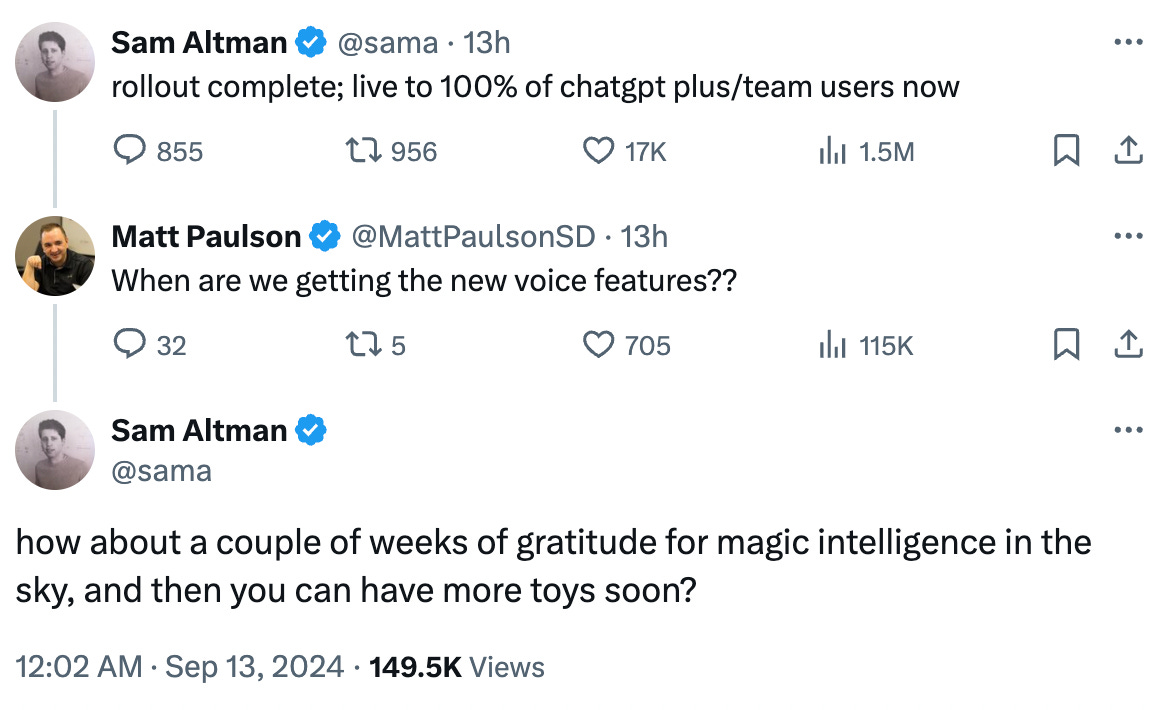

We learned a few things about OpenAI with this release. First, despite rumblings that the company was lost amid top staff exits, including its chief scientist Ilya Sutskever, OpenAI showed it can still push AI’s cutting edge forward. Second, OpenAI putting this release ahead of other projects (Where’s Sora? GPT-4o voice?) may indicate it’s found some focus, and is pushing hard on an approach it believes in. Sam Altman suggested as much in a response on X to one frustrated user. “How about a couple of weeks of gratitude for magic intelligence in the sky, and then you can have more toys soon?” he wrote. For OpenAI, which is doing a lot at once, some focus would be welcome. And it’s definitely better than the alternative explanation, that Sora and GPT-4o voice are potentially failing.

More on Big Technology Podcast

I’m about to publish an episode on OpenAI’s o1 with Bloomberg’s Parmy Olson, whose book on the AI arms race, Supremacy, is out this week. You can find it on Apple Podcasts, Spotify, or your podcast app of choice.

Lighting the Halo of Innovation at LG NOVA InnoFest 2024 (sponsor)

LG NOVA’s fourth annual InnoFest will take place at the Palace of Fine Arts in San Francisco September 25-26. The event provides attendees with the unique opportunity to join in the discussion on the future of innovation in the HealthTech, AI, CleanTech, Life Sciences, Smart Life and Mobility ecosystems.

Register today and come be inspired and energized to build a better tomorrow.

Advertise on Big Technology?

Reach 145,000+ plugged-in tech readers with your company’s latest campaign, product, or thought leadership. To learn more, write alex@bigtechnology.com or reply to this email.

What Else I’m Reading, Etc.

Big Technology’s dispatch from China on the state of the country’s booming EV market [Big Technology]

Wharton professor Ethan Mollick’s early impressions of o1-preview [One Useful Thing]

NVIDIA CEO Jensen Huang speaks plainly about others’ reliance on his company [Yahoo]

Apple’s iPhone16 launch wasn’t that exciting [Reuters]

JD Vance says Apple sometimes benefits from slave labor in China [CNBC]

Sergey Brin is working at Google almost every day now [TechCrunch]

Management at Saudi Arabia’s Neom flagship project is a disaster [WSJ]

Quote Of The Week

“Meta is a good name.”

Mark Zuckerberg, who is done apologizing, says he has no regrets renaming Facebook after the metaverse.

Number of The Week

$150 billion

OpenAI’s new fundraising effort now has the company looking at a $150 billion valuation, far higher than the $100 billion valuation previously reported.

This Week on Big Technology Podcast: Apple's iPhone16 Debut + Apple Intelligence Delays

Ranjan Roy from Margins is back for our weekly discussion of the latest tech news. We cover 1) Apple's iPhone16 launch 2) Apple Intelligence disappointment & delays 3) Apple's future runs through Siri 4) Apple Intelligence's impact on iPhone16 sales 5) Will the iPhone17 spark a supercycle? 6) Does Apple Intelligence compete with apps? 7) Apple's reliance on its services business 8) Is Apple a software company now? 9) Risks of Apple's software reliance 10) What happened to the Vision Pro?

You can listen on Apple, Spotify, or wherever you get your podcasts.

Thanks again for reading. Please share Big Technology if you like it!

And hit that Like Button for more on the cutting edge of AI

My book Always Day One digs into the tech giants’ inner workings, focusing on automation and culture. I’d be thrilled if you’d give it a read. You can find it here.

Questions? News tips? Email me by responding to this email, or by writing alex@bigtechnology.com. Or find me on Signal at 516-695-8680.

Thank you for reading Big Technology! Paid subscribers get our weekly column, breaking news insights from a panel of experts, monthly stories from Amazon vet Kristi Coulter, and plenty more. Please consider signing up here.