Artificial intelligence had a chaotic week.

The board of directors at OpenAI, which created ChatGPT, fired its wunderkind CEO, Sam Altman. Then, Microsoft hired Altman, along with OpenAI’s president and co-founder, who had quit in solidarity (a bunch of employees threatened to quit, too). Then, OpenAI reinstated Altman as CEO. And now, the board is being overhauled. All within a week.

If you’re wondering what this means for AI, the answer is not much. It was a sideshow. A soap opera. The real work around AI continues, uninterrupted, at mid- to large-size companies implementing it around the world and at dozens of large and small tech companies delivering their own AI solutions. Still…

The saga brings up important questions.

Why was OpenAI’s board so concerned about their beloved CEO that they fired him?

One possible answer: This was a conflict about ethics. Perhaps Altman had the company building AI that could be misused or, worse, overtly harm humanity. OpenAI began as a non-profit dedicated to building AI in a responsible, humanity-enhancing manner, with a focus on managing the sci-fi–like downsides of an unfettered sentient computer system. Perhaps the board feared Altman had steered the company too far away from that mission.

I don’t know if that’s why they fired him, but others share the thesis. Regardless, it brings up a bigger question more of us should consider.

Who’s in charge?

Technology always operates on the frontier, pushing boundaries and resisting government oversight. This is true even when tech leaders claim the opposite. Sam Bankman-Fried of the failed FTX and CZ of Binance often said, “We welcome regulation,” while their actions said otherwise.

I’m no fan of rampant government regulation, but I often find myself asking, “Why do we let a bunch of twenty- and thirty-year-old tech savants disrupt our world with no constraints and no accountability?”

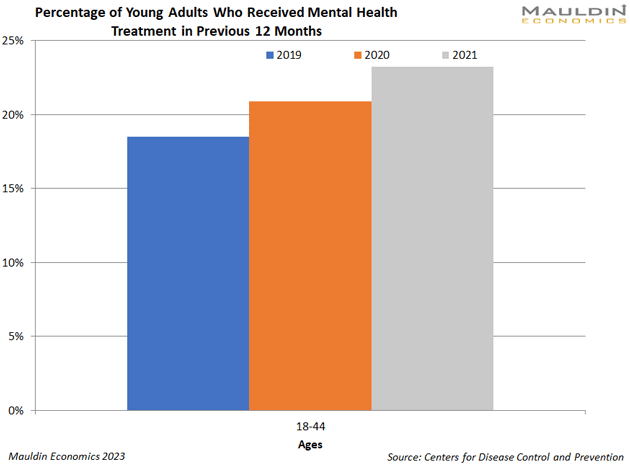

Does anyone doubt that social media has harmed teenagers and twenty-something adults? If you do, you probably don’t have children that age. Youth anxiety levels are abnormally high. I know several bright and talented young people raised by loving parents who are periodically knocked off course by debilitating bouts of anxiety.

Source: CDC

Scott Galloway, a marketing professor at NYU and host of so many podcasts I can’t keep track of them, has been calling attention to the growing loneliness epidemic in the US. So has the US Surgeon General. It turns out loneliness in the age of “social” media is shockingly high.

Source: profgalloway.com

I’m sure COVID didn’t help, but these problems were in place before the pandemic. They started right about the time Facebook became popular. Active Facebook users jumped from 20 million in 2007 to 1.01 billion in 2012. Anxiety disorders in children and teens jumped 20% during that stretch. Correlation isn’t causation, but…

I don’t know a single person who feels social media is an overall good thing. And yet, we use it because we think we have to. Even I, who mostly hate social media, will ask you to follow me! Why? Because that’s where the eyeballs are.

Social media has altered the social fabric. AI could have an even bigger influence. It has massive potential, with a side of existential downside risk.

I’ve been in business management most of my career. I love the business of doing business. Solving problems, growing the P&L, building a fortress balance sheet, collaborating with smart people. This is the lens through which I view AI, and I’m excited about it. We’ll use it in our business, and we’ll invest in businesses that leverage AI to boost productivity. As I wrote last week, there is tremendous opportunity here.

But let’s not kid ourselves. Something could go very wrong with AI, just as it did with social media. We’ve screwed an entire generation, and no one seems to be held accountable. It was a slow-moving train wreck, and it was intentional. Our children were monetized by a bunch of unrestrained twenty- and thirty-year-old techies.

At the risk of sounding like an old man ranting, let me say this: techies should push boundaries. Twenty- and thirty-year-olds should push boundaries. Reach! That’s how you break out and make your career grow and thrive. That’s where innovation comes from. I’m not criticizing that group. I know many of them and cheer them on.

All I’m saying is there should be some guardrails. And this is where it gets really hard. Not many people think the government can provide them, and I’m among the skeptics. Yet there are risks here that people are overlooking, and they are too big to ignore.

I suspect OpenAI’s board of directors came to the same conclusion and tried to do something about it. They were pushed aside. We should ask if that was the right thing to do.

What could go wrong with AI? A lot.

Best regards,